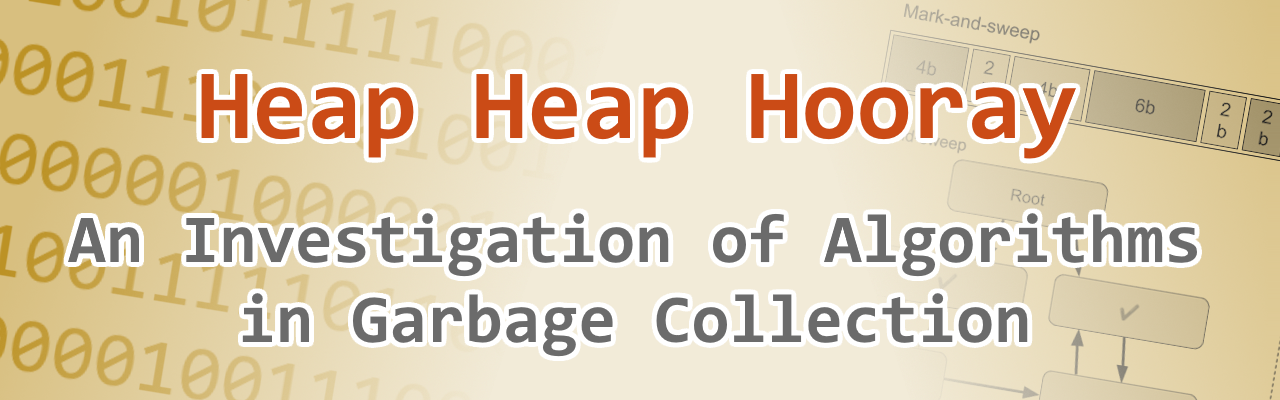

Project Summary

The MiniJava compiler was a project for the Compiler Theory course that followed Andrew Appel’s Modern Compiler Implementation in Java. This compiler, which we’ve named “MJC” (“MiniJava Compiler”), compiles MiniJava source code and produces assembly code for the SPARC architecture.

MJC includes most of the common phases of code compilation: lexing/parsing, type-checking (semantics), translation (intermediate representation), assembly code generation, register allocation, and lastly the output step. The assembly output can then be linked alongside our compiler runtime (CRT for short) to produce an executable file.

For the purposes of the Compiler Theory course, MiniJava is a sufficient language for a simple compiler. It is a subset of the Java programming language, and while it does contain powerful features such as class inheritance, it lacks other Java features such as the null keyword, and functions can only return integer values, objects cannot escape functions, etc.

For research purposes, MiniJava does not provide a great environment. As with Java, there is no way to manually free memory. However, MiniJava does not contain a garbage collector out-of-the-box as Java does, so all memory allocations are leaked and will never be freed.

As part of this project, we have implemented garbage collection in the CRT using the C programming language. We chose to implement a few unique methods of garbage collection to allow us to test configurations and determine which methods perform best for MiniJava source code, based on which data structures and algorithms are exhibited by the source code. We hope that our findings here can be applied to other programming languages.

Our garbage collector supports: reference counting, mark-and-sweep, copying, and generational garbage collection algorithms.

Project Objective

Heap Heap Hooray's goal was to demystify garbage collection in order to make it easier for the average user to understand.

Manufacturing Design Methods

We began by implementing a garbage collection algorithm and ran several test cases with it. Originally we were attempting to pass the test cases, but we found it more entertaining to try to make it fail.

When we found a fault in the algorithms, we would research, then try to implement something that would fix our previous problem.

When reference counting had the drawback of failing to detect cyclic references, we implemented mark-and-sweep. When mark-and-sweep failed to work with a heavily fragmented heap, we implemented copying. When copying's collection cycles took too long, we implemented generational.

Specification

Our software is built on the MiniJava Compiler, which was written for SPARC architecture.

QEMU is used as our SPARC emulator, and Jabberwocky is used as the containerization software to keep all necessary tools together.

Analysis

Given that the MJC garbage collector was primarily designed for educational purposes, it's most aptly compared to itself. We explored the merits and drawbacks of each algorithm, highlighting how specific ones address shortcomings in others.

Future Works

As this project was built on top of the MiniJava compiler, it would be nice to add more functionality to the compiler. Currently, the compiler does not support two-dimensional arrays or Null values, and complex algorithms are hard to execute without either of these.

Manufacturing Design Methods

We began by implementing a garbage collection algorithm and ran several test cases with it. Originally we were attempting to pass the test cases, but we found it more entertaining to try to make it fail.

When we found a fault in the algorithms, we would research, then try to implement something that would fix our previous problem.

When reference counting had the drawback of failing to detect cyclic references, we implemented mark-and-sweep. When mark-and-sweep failed to work with a heavily fragmented heap, we implemented copying. When copying's collection cycles took too long, we implemented generational.

![]()

.jpg)

.jpg)